pp. 57-65

Rakesh Kumar Bhujade1*, Amitkant Pandit2 and Naveen Hemrajani3

Suresh Gyan Vihar University, Jagatpura, Rajasthan, INDIA; SECE, Shri Mata Vaishno Devi University, Jammu, INDIA; JECRC University, Jaipur, Rajasthan

*Corresponding Author email : rakesh.bhujade@gmail.com

ABSTRACT

Advances in artificial intelligence have led to the development of various “smart” devices. A major challenge in the field of image processing is recognizing documents in both printed and handwritten form. Character recognition is one of the most widely used biometric traits for authenticating persons as well as documents. Character recognition is a type of document image analysis in which a scanned digital image containing either machine-printed or handwritten script is input into a character recognition software engine and translated into an editable, machine-readable digital text format. A neural network is designed to model the way in which the brain performs a particular task or function of interest. A multilayer back-propagation neural network can be implemented for efficient recognition, in which errors are corrected through back propagation and the rectified neuron values are transmitted by the feed-forward method through a network with multiple hidden layers. The main focus is on the proportioning of the hidden layers to obtain maximum accuracy with less training and testing time.

Keywords: Back Propagation Neural Network (BPNN)

INTRODUCTION

Computer vision applications depend largely on the Human Computer Interface (HCI). Over the last few years, with the growing need for an efficient HCI system, the search for an easier way to interact with a computer has become a matter of concern. Automated character recognition systems have gained popularity for their application in computer vision, handwriting recognition, text-based decision making and intelligent text recognition systems. Although many handwritten character recognition systems have been designed over the last few years, most of them rely on off-line processing, and the limit on processing time makes it difficult to operate them in a real-time environment. This paper deals with the real-time detection of numeric characters based on fingertip movements. Character recognition finds application in many major areas, such as license plate recognition systems [1] and handwriting recognition systems [3].

In real-time environments, most algorithms use gestures from some part of the body [6]. Characters are also separated from natural events, as described in [8]. The segmentation process is important for the recognition of characters, and there are many segmentation-based techniques, such as [10]. There are two types of recognition system: one uses a classifier [13] and the other does not.

Character recognition systems are not confined to English characters; they have also been developed for more complex scripts such as Tamil [16], Devnagari [17], Chinese [18] and Bengali [19]. In this paper, a new approach to numeric character recognition is proposed in which a red-colored fingertip is used to write the characters. The line joining the first two tip positions is treated as the reference axis. Each subsequent tip position is then determined, and its angular variation from the reference axis is computed.

The hand moves in different directions, and the angular deviations from the reference axis are not the same for different numeric characters. For example, the angular variation when writing 1 is almost 0°, while the deviation for 2 differs from that of 3, 4 or any other character. These variations provide the classification criterion and distinguish each character from the others. The variation of angular movement over a particular number of frames, which depends on the frame rate at which the video is taken, is used to train a feed-forward neural network. The trained network is later used for the final recognition of the characters.
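The angular-deviation feature described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `angular_deviations`, the list of (x, y) tip positions, and the choice to measure each angle along the line from the first tip position to the current one are assumptions, since the text does not fix these details.

```python
import math


def angular_deviations(tips):
    """Angles (in degrees) of later tip positions relative to the reference axis.

    The reference axis is the line joining the first two tip positions.
    """
    if len(tips) < 3:
        return []
    (x0, y0), (x1, y1) = tips[0], tips[1]
    reference = math.atan2(y1 - y0, x1 - x0)  # direction of the reference axis
    angles = []
    for x, y in tips[2:]:
        # Direction from the first tip position to the current one (assumed convention).
        deviation = math.atan2(y - y0, x - x0) - reference
        # Wrap into [-180, 180) degrees so that small deviations stay small.
        deviation = (deviation + math.pi) % (2 * math.pi) - math.pi
        angles.append(math.degrees(deviation))
    return angles


# A straight vertical stroke, as when writing the numeral 1.
straight = angular_deviations([(0, 0), (0, 1), (0, 2), (0, 3)])
# A stroke that bends away from the reference axis.
bent = angular_deviations([(0, 0), (1, 0), (1, 1)])
```

For the straight stroke every deviation is zero, which matches the remark that the variation for 1 is almost 0°. The sequence of angles over a fixed number of frames could then serve as the input vector of the network.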

Character Recognition Procedure

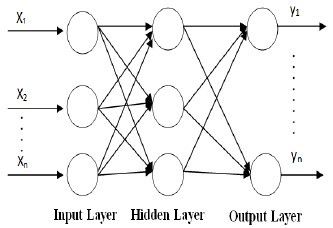

- Pre-processing: The pre-processing stage yields a clean document, in the sense that maximal shape information is obtained with maximal compression and minimal noise on the normalized image.

- Segmentation: Segmentation is an important stage because the extent to which words, lines or characters can be separated directly affects the recognition rate of the script.

- Feature extraction: After the character is segmented, features such as height, width, horizontal lines, vertical lines, and top and bottom detection are extracted.

- Classification: The back propagation algorithm is used for classification or recognition.

- Output: The output is saved in text format.

Fig 1.1: Character recognition steps
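As a rough illustration of the feature extraction step, the sketch below computes the height, width, and top and bottom rows of a segmented character stored as a binary matrix. The function name `bounding_box_features` and the list-of-rows representation (1 for ink, 0 for background) are assumptions made for this example.

```python
def bounding_box_features(bitmap):
    """Return simple geometric features of a binary character image."""
    # Rows and columns that contain at least one ink pixel.
    ink_rows = [r for r, row in enumerate(bitmap) if any(row)]
    ink_cols = [c for c in range(len(bitmap[0])) if any(row[c] for row in bitmap)]
    if not ink_rows:
        return None  # blank image: nothing to measure
    top, bottom = ink_rows[0], ink_rows[-1]
    left, right = ink_cols[0], ink_cols[-1]
    return {
        "top": top,
        "bottom": bottom,
        "height": bottom - top + 1,
        "width": right - left + 1,
    }


# A small hand-made glyph used only to exercise the function.
glyph = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
features = bounding_box_features(glyph)
```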

Neural Network

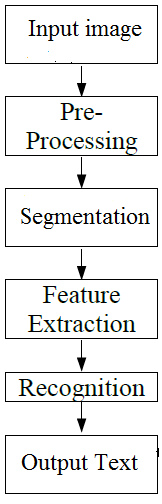

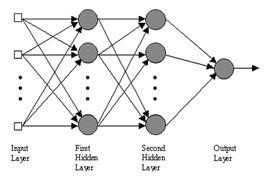

A neural network serves as the classifier in our proposed method. A Multi Layer Perceptron (MLP) has been used to map the input features to the output numeric characters. The neural network is a 3-layer feed-forward supervised network, as shown in Fig. 1.2, whose input nodes carry the input attributes and whose output nodes carry the output attributes. The network has one hidden layer consisting of 158 neurons and one output layer with 10 neurons, since there are 10 output classes. The hidden layer uses the log-sigmoid activation function. The network is fully connected and the back propagation algorithm is used for training. The number of inputs was determined experimentally.

Fig1.2: Basic structure of Neural Network
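The forward pass of the network described above can be sketched in a few lines. The hidden layer size (158), the output size (10) and the log-sigmoid hidden activation come from the text; the number of inputs (`N_INPUTS = 20`), the random initial weights, and the use of log-sigmoid at the output layer are assumptions made only so that the example runs.

```python
import math
import random


def logsig(z):
    """Log-sigmoid activation function."""
    return 1.0 / (1.0 + math.exp(-z))


def make_layer(n_in, n_out, rng):
    # One row of weights per neuron; the last entry of each row is the bias.
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]


def layer_forward(layer, inputs):
    return [logsig(sum(w * x for w, x in zip(row[:-1], inputs)) + row[-1])
            for row in layer]


def mlp_forward(hidden, output, inputs):
    """Fully connected feed-forward pass: inputs -> hidden -> output."""
    return layer_forward(output, layer_forward(hidden, inputs))


rng = random.Random(0)
N_INPUTS = 20                            # hypothetical; the paper sets it experimentally
hidden = make_layer(N_INPUTS, 158, rng)  # 158 hidden neurons, as in the text
output = make_layer(158, 10, rng)        # 10 output neurons, one per digit
scores = mlp_forward(hidden, output, [0.0] * N_INPUTS)
predicted_digit = max(range(10), key=scores.__getitem__)
```

With untrained random weights the predicted digit is meaningless; the sketch only shows how the layers are wired together.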

Artificial Neural Network

Artificial neural networks (ANNs) are motivated by the central nervous systems of animals and are capable of machine learning and pattern recognition. An ANN resembles the brain in its ability to adapt through a learning process, and the acquired knowledge is stored in the synaptic weights, which are the interconnections between neurons. ANNs are typically represented as systems of interconnected neurons that can compute values from the given inputs [9]. Learning in an ANN is the process by which the free parameters of the network are adjusted through stimulation by the environment in which the network is embedded.

The type of learning is determined by the way in which the parameters change. Learning can mainly be categorized into:

- Supervised learning: This type of learning assumes the availability of a labeled set of training data made up of N input-output pairs.

- Unsupervised learning: This form of learning does not assume the availability of a set of training data made up of N input-output pairs. Such networks learn to organize input vectors according to how they are grouped spatially, and tune themselves by considering a neighborhood [10].

The main areas in which ANNs play a major role are:

- Computer numerical control.

- Classification, i.e. pattern and sequence recognition.

- Data processing, which consists of compression, clustering, filtering, etc.

- Robotics.

- Function approximation.

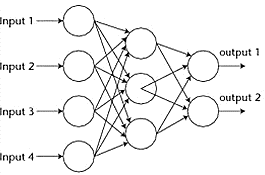

In an ANN, information is always stored in a number of different parameters, which can be adjusted by the user or learned by supplying the ANN with samples of input along with the desired output. The figure below shows a simple ANN:

Fig 1.3: Neural network system

Types of Neural Networks

Multi-Layer Feed-forward Neural Networks

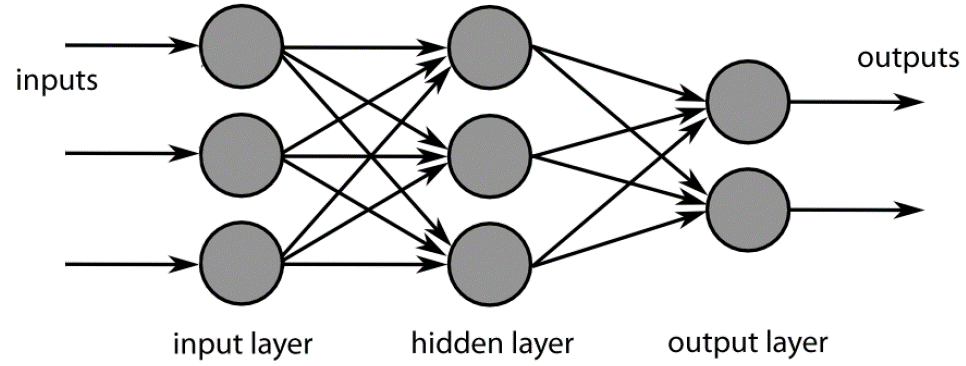

Feed-forward ANNs transmit signals in one direction only, i.e. from input to output. There is no feedback in such networks; in other words, the output of a layer does not affect that same layer. A feed-forward network tends to be a straightforward network that associates inputs with outputs. Networks of this kind are generally used for pattern recognition.

Fig 1.4: Three layered neural network

In a three-layer network, the first layer, known as the input layer, receives the input variables. The last layer is known as the output layer. The layers between the input layer and the output layer are known as hidden layers. An ANN can have more than one hidden layer.

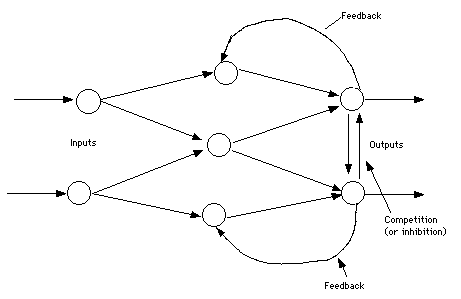

Multi-Layer Feed-back Neural Networks

In a feedback network, signals can travel in both directions through loops in the network. Feedback networks are somewhat complicated and, at the same time, very powerful. They are dynamic, i.e. their state changes continuously until they reach an equilibrium point. They remain at the equilibrium point until the input changes and a new equilibrium needs to be found.

Feedback neural networks are also known as interactive or recurrent, although the latter term is often used to denote feedback connections in single-layer organizations.

Fig 1.5: Feedback neural network

Training this type of network comprises two phases. The first is the forward phase, in which the activations spread from the input layer to the output layer. The second is the backward phase, in which the difference between the observed actual value and the desired (nominal) value at the output layer is propagated backwards so that the weights and bias values can be amended.

Advantages of Neural Networks

- An ANN is organized much like a nervous system. The system works in parallel, so it can give solutions to problems in which multiple constraints must be satisfied at the same time.

- In an ANN, what the system learns is distributed over the whole network.

- It is easier to handle and less prone to noise.

- They have the ability to generalize.

- They can solve complex problems that are not easy for humans.

- The chance of error is low, and a small change in the input does not greatly affect the output.

SYSTEM MODULE

Back-propagation algorithm

The back-propagation learning algorithm has two main steps: i) propagation and ii) weight update.

Propagation is of two types:

- Forward propagation: Function signals flow from the input layer to the hidden and output layers. Forward propagation of a training input is used to generate the output activations.

- Backward propagation: Error signals propagate from the output layer back to the hidden and input layers. Backward propagation is used to generate the deltas of all output and hidden neurons, which serve as feedback towards the input layer. Because the network output is compared with the desired output and any error is corrected by updating the weights, this step is what improves the accuracy of the network over a forward pass alone.

The weight update is done in the following steps: a) multiply the local gradient by the input signal of the neuron to obtain the gradient of the weight; b) subtract a portion of this gradient from the weight. It can be expressed by the following formula:

$$w_{ij} \leftarrow w_{ij} - \eta \, \frac{\partial E}{\partial w_{ij}}$$

where $\eta$ is the learning rate, which sets the portion subtracted, and $\partial E / \partial w_{ij}$ is the gradient obtained in step a).

Fig 2.1: Back Propagation at the last node
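The two weight-update steps can be illustrated with a single weight. In this sketch the function name `update_weight`, the default learning rate of 0.1, and the one-weight linear neuron used to exercise it are assumptions chosen for the example; `local_gradient` is taken to mean the derivative of the error with respect to the neuron's net input.

```python
def update_weight(weight, local_gradient, input_signal, learning_rate=0.1):
    """Apply one gradient-descent update to a single weight."""
    # Step a): gradient of the error with respect to this weight.
    gradient = local_gradient * input_signal
    # Step b): subtract a portion of the gradient, set by the learning rate.
    return weight - learning_rate * gradient


# Repeated updates for a linear neuron y = w * x with error 0.5 * (y - target)^2.
w, x, target = 0.0, 2.0, 1.0
for _ in range(50):
    local_gradient = w * x - target  # dE/dy for the squared error above
    w = update_weight(w, local_gradient, x)
```

After the loop, `w * x` is very close to the target, which is the behaviour the update rule is meant to produce.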

Steps of the backpropagation algorithm

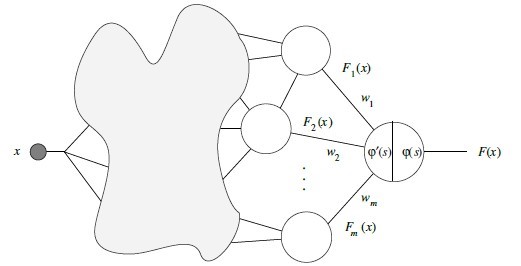

We can now formulate the complete backpropagation algorithm and prove by induction that it works in arbitrary feed-forward networks with differentiable activation functions at the nodes. We assume that we are dealing with a network with a single input and a single output unit.

Backpropagation algorithm. Consider a network with a single real input $x$ and network function $F$. The derivative $F'(x)$ is computed in two phases:

- Feed-forward: the input $x$ is fed into the network. The primitive functions at the nodes and their derivatives are evaluated at each node. The derivatives are stored.

- Backpropagation: the constant 1 is fed into the output unit and the network is run backwards. Incoming information to a node is added and the result is multiplied by the value stored in the left part of the unit. The result is transmitted to the left of the unit. The result collected at the input unit is the derivative of the network function with respect to $x$.

The following proposition shows that the algorithm is correct.

Proposition [11]. The algorithm computes the derivative of the network function $F$ with respect to the input $x$ correctly.

Proof. The algorithm works for units in parallel and also for weighted edges. Let us make the induction assumption that the algorithm works for any feed-forward network with $n$ or fewer nodes. The feed-forward step is executed first and the result of the single output unit is the network function $F$ evaluated at $x$. Assume that $m$ units, whose respective outputs are $F_1(x), \ldots, F_m(x)$, are connected to the output unit. Since the primitive function of the output unit is $\varphi$, we know that

$$F(x) = \varphi\big(w_1 F_1(x) + w_2 F_2(x) + \cdots + w_m F_m(x)\big).$$

The derivative of $F$ at $x$ is thus

$$F'(x) = \varphi'(s)\big(w_1 F_1'(x) + w_2 F_2'(x) + \cdots + w_m F_m'(x)\big),$$

where $s = w_1 F_1(x) + w_2 F_2(x) + \cdots + w_m F_m(x)$. The subgraph of the main graph which includes all possible paths from the input unit to the unit whose output is $F_1(x)$ defines a subnetwork whose network function is $F_1$ and which consists of $n$ or fewer units. By the induction assumption we can calculate the derivative of $F_1$ at $x$ by introducing a 1 into the unit and running the subnetwork backwards. The same can be done with the units whose outputs are $F_2(x), \ldots, F_m(x)$. If instead of 1 we introduce the constant $\varphi'(s)$ and multiply it by $w_1$, we get $w_1 F_1'(x)\varphi'(s)$ at the input unit in the backpropagation step. Similarly we get $w_2 F_2'(x)\varphi'(s), \ldots, w_m F_m'(x)\varphi'(s)$ for the rest of the units. In the backpropagation step with the whole network we add these $m$ results and we finally get

$$\varphi'(s)\big(w_1 F_1'(x) + w_2 F_2'(x) + \cdots + w_m F_m'(x)\big),$$

which is the derivative of $F$ evaluated at $x$. Note that introducing the constants $w_1\varphi'(s), \ldots, w_m\varphi'(s)$ into the $m$ units connected to the output unit can be done by introducing a 1 into the output unit, multiplying by the stored value $\varphi'(s)$ and distributing the result to the $m$ units through the edges with weights $w_1, w_2, \ldots, w_m$. We are in fact running the network backwards, as the backpropagation algorithm demands. This means that the algorithm works with networks of $n + 1$ nodes and this concludes the proof.
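The two phases of the algorithm can be tried out on a small network. The sketch below is an illustration under stated assumptions, not code from the paper: the network is given as a hypothetical list `edges`, where `edges[j]` holds the `(source, weight)` pairs feeding node `j`, node 0 is the input unit, the last node is the output unit, edges only run from lower to higher node numbers, and the sigmoid is assumed as the primitive function at every non-input node.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def feed_forward(edges, x):
    """Feed-forward phase: evaluate every node and store its derivative."""
    outputs = [x]   # node 0 is the input unit
    stored = [1.0]  # derivative of the identity input unit
    for incoming in edges[1:]:
        s = sum(w * outputs[i] for i, w in incoming)
        y = sigmoid(s)
        outputs.append(y)
        stored.append(y * (1.0 - y))  # derivative of the sigmoid at s
    return outputs, stored


def network_derivative(edges, x):
    """Backpropagation phase: return F(x) and F'(x)."""
    outputs, stored = feed_forward(edges, x)
    incoming_sum = [0.0] * len(edges)
    incoming_sum[-1] = 1.0  # the constant 1 is fed into the output unit
    for j in range(len(edges) - 1, 0, -1):
        # Add incoming information, multiply by the stored derivative,
        # and send the result backwards along each weighted edge.
        value = incoming_sum[j] * stored[j]
        for i, w in edges[j]:
            incoming_sum[i] += w * value
    return outputs[-1], incoming_sum[0] * stored[0]


# Two hidden units plus a direct input-to-output edge (hypothetical weights).
EDGES = [[], [(0, 0.5)], [(0, -1.2)], [(1, 0.8), (2, 0.3), (0, 0.1)]]
value, slope = network_derivative(EDGES, 0.7)
```

A quick sanity check is to compare `slope` with a finite-difference estimate of the derivative of the same network function; the two should agree to several decimal places.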

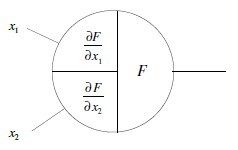

Implicit in the above analysis is that all inputs to a node are added before the one-dimensional activation function is computed. We can consider also activation functions f of several variables, but in this case the left side of the unit stores all partial derivatives of f with respect to each variable. Fig2.2 shows an example for a function f of two variables x1 and x2, delivered through two different edges. In the backpropagation step each stored partial derivative is multiplied by the traversing value at the node and transmitted to the left through its own edge. It is easy to see that backpropagation still works in this more general case.

Multilayer perceptron neural networks with the EBP algorithm have been applied to a wide variety of problems. In this paper, a two-layer perceptron, i.e. one hidden layer and one output layer, has been used [5]. The structure of the MLP network for English character recognition is shown in Fig. 2.3.

Fig 2.3: Multilayer Perceptron Network (MLPN)

In the MLPN with the back propagation training algorithm, the procedure and calculations are as follows:

Fig 2.2: Partial derivatives at a node

The backpropagation algorithm also works correctly for networks with more than one input unit in which several independent variables are involved. In a network with two inputs for example, where the independent variables x1 and x2 are fed into the network, the network result can be called F(x1, x2). The network function now has two arguments and we can compute the partial derivative of F with respect to x1 or x2. The feed-forward step remains unchanged and all left side slots of the units are filled as usual. However, in the backpropagation step we can identify two subnetworks: one consists of all paths connecting the first input unit to the output unit and another of all paths from the second input unit to the output unit. By applying the backpropagation step in the first subnetwork we get the partial derivative of F with respect to x1 at the first input unit. The backpropagation step on the second subnetwork yields the partial derivative of F with respect to x2 at the second input unit. Note that we can overlap both computations and perform a single backpropagation step over the whole network. We still get the same results.

Multilayer Perceptron Network

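The net input to unit j and its output are

$$net_j = \sum_i W_{ij} X_i, \qquad O_j = f(net_j),$$

where $X_i$ is the output of unit i, $W_{ij}$ is the weight from unit i to unit j, and $f$ is the activation function. The generalized delta rule algorithm was used to update the weights of the neural network in order to minimize the cost function

$$E = \frac{1}{2} \sum_p \sum_k \left(d_{pk} - O_{pk}\right)^2,$$

where $d_{pk}$ and $O_{pk}$ are the desired and actual values, respectively, of the output unit k for training pair p. Convergence is achieved by updating the weights using the following formulas:

$$W_{ij}(n+1) = W_{ij}(n) + \Delta W_{ij}(n),$$

$$\Delta W_{ij}(n) = \eta \, \delta_j X_i + \alpha \, \Delta W_{ij}(n-1),$$

where $\eta$ is the learning rate, $\alpha$ is the momentum, $W_{ij}(n)$ is the weight from hidden node i or from an input to node j at the nth iteration, $X_i$ is either the output of unit i or an input, and $\delta_j$ is an error term for unit j [1]. If unit j is an output unit, then

$$\delta_j = f'(net_j)\,(d_j - O_j).$$

If unit j is an internal hidden unit, then

$$\delta_j = f'(net_j) \sum_k \delta_k W_{jk}.$$

For the log-sigmoid activation function, $f'(net_j) = O_j (1 - O_j)$.

Computing the Fourier descriptors involves finding the discrete Fourier coefficients a[k] and b[k] given by equations (1) and (2).

The Fourier coefficients derived according to equations (1) and (2) are not rotation or shift invariant. To obtain Fourier descriptors that are invariant with respect to rotation and shift, the following operations are defined. For each n, a set of invariant descriptors r(n) is computed. A new set of descriptors s(n) is then computed by normalizing r(n) for the size of the character.

The coefficients a(n), b(n) and the invariant descriptors s(n), n = 1, 2, ..., (L - 1), were derived for all of the characters.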
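The generalized delta rule with a learning rate and a momentum term, as used for the multilayer perceptron above, can be sketched as follows. This is an illustrative sketch only: the 2-3-1 network size, the values `eta=0.5` and `alpha=0.5`, the random initial weights, and the logical OR training set are assumptions made for the example, and log-sigmoid units are assumed in both layers.

```python
import math
import random


def logsig(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_layer(n_in, n_out, rng):
    # One row per unit; the last entry of each row is the bias weight.
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]


def forward(w_hidden, w_out, x):
    xb = list(x) + [1.0]  # inputs plus a constant bias input
    hb = [logsig(sum(w * v for w, v in zip(row, xb))) for row in w_hidden] + [1.0]
    out = [logsig(sum(w * v for w, v in zip(row, hb))) for row in w_out]
    return xb, hb, out


def train_step(w_hidden, w_out, dw_hidden, dw_out, x, d, eta=0.5, alpha=0.5):
    """One generalized-delta-rule update with momentum; returns the pattern error."""
    xb, hb, out = forward(w_hidden, w_out, x)
    # Error terms of the output units: O(1 - O)(d - O).
    delta_out = [o * (1 - o) * (t - o) for o, t in zip(out, d)]
    # Error terms of the hidden units, using the output weights before they change.
    delta_hid = [hb[j] * (1 - hb[j]) * sum(dk * row[j] for dk, row in zip(delta_out, w_out))
                 for j in range(len(w_hidden))]
    for weights, steps, deltas, inputs in ((w_out, dw_out, delta_out, hb),
                                           (w_hidden, dw_hidden, delta_hid, xb)):
        for j, row in enumerate(weights):
            for i in range(len(row)):
                # Weight change = eta * delta_j * X_i + alpha * previous change.
                steps[j][i] = eta * deltas[j] * inputs[i] + alpha * steps[j][i]
                row[i] += steps[j][i]
    return 0.5 * sum((t - o) ** 2 for o, t in zip(out, d))


rng = random.Random(0)
w_hidden, w_out = init_layer(2, 3, rng), init_layer(3, 1, rng)
dw_hidden = [[0.0] * 3 for _ in w_hidden]  # previous weight changes (momentum memory)
dw_out = [[0.0] * 4 for _ in w_out]
# Logical OR, used only as a tiny illustrative training set.
samples = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [1])]
errors = [sum(train_step(w_hidden, w_out, dw_hidden, dw_out, x, d) for x, d in samples)
          for _ in range(500)]
```

Here `errors` holds the summed pattern error for each epoch, so plotting or printing it shows how the cost function falls as the weights are updated.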

LITERATURE REVIEW

In 2014, Ramzi, A. and Zahary, A. [1] studied online handwriting recognition for Arabic characters using a back propagation neural network (BPNN). They evaluated its performance using online features of characters as input to the BPNN, in comparison with combining online and offline character features as the input.

In 2013, Phienthrakul, T. and Chevakulmongkol, W. [2] presented a handwritten recognition system for the Pali cards of Buddhadasa Indapanno. The proposed scheme is composed of 4 main procedures: image pre-processing, character segmentation, feature extraction, and character recognition. The characters are then recognized by a feed-forward back-propagation neural network.

In 2013, Selvi, P.P. and Meyyappan, T. [3] proposed a method to recognize Arabic numerals using a back propagation neural network. Arabic numerals are the ten digits that descended from the Indian numeral system. The recognition phase recognizes the numerals accurately. The proposed technique is implemented in Matlab. Sample handwritten images are tested with the proposed method and the results are plotted.

In 2013, Sahu, N. and Raman, N.K. [4] observed that character recognition systems for various languages and scripts have gained importance in recent decades and are an area of deep interest for many researchers. Their growth is strongly integrated with neural networks.

In 2012, Nguang Sing Ping and Yusoff, M.A. [5] described the application of the 13-point feature of skeleton to image-to-character recognition. The image can be a scanned handwritten character or a character drawn in any graphic design tool, such as Windows Paintbrush. The image is processed through conventional and 13-point feature of skeleton methods to extract the raw data.

In 2012, Pradeep, J., Srinivasan, E. and Himavathi, S. [6] presented an off-line handwritten English character recognition system using a hybrid feature extraction technique and neural network classifiers. Two neural network (NN) topologies, namely a back propagation neural network and a radial basis function network, are built to classify the characters. A k-nearest neighbour network is also built for comparison. The feed-forward NN topology exhibits the highest recognition accuracy and is identified as the most suitable classifier.

PROBLEM FORMULATION

The primary steps involved in any character recognition method are pre-processing, feature extraction and classification. The most basic step is pre-processing, which itself consists of many steps such as binarization, inversion, thinning, segmentation and noise reduction. The difference between on-line and off-line character recognition methods was also reviewed, and various research papers on feature extraction methods were gone through. The features are important for an efficient recognition method: the better the features extracted, the better the result. There are many feature extraction methods, and the method must be chosen properly based on the input characters. Then comes classification, for which classifiers are used to produce the final output. Several types of classifiers were studied, among them hidden and Back-propagation Neural Networks (BPNN).

PROPOSED METHODOLOGY

It has been observed in character recognition problems, and also in other pattern recognition problems, that in many cases the distinguishing features are located in small parts of a group of similar characters while the other parts are indistinguishable. These smaller parts containing the distinguishing features can be discriminated with lower-order moments than are needed for the complete images. We have explored this observation in the recognition of handwritten alphanumeric characters using a multi-layer neural network, and found that considerable improvement in recognition can be achieved through the proposed method.

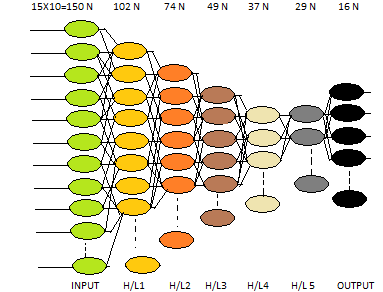

Fig Proposed five Hidden Layers BP-NN Architecture

The neural network architecture used in this work is shown in Fig. 2. It is a back propagation network with five hidden layers, fully interconnected with different weights. The input layer consists of 150 neurons because every numeral is assumed to have a size of 15×10. The inputs to the network are bit patterns in binary form, i.e. 0 and 1. These bit patterns are chosen so that they correspond to certain characters. All the boxes in the matrix are numbered from 1 to 35 as shown in the Fig.
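To make the input encoding concrete, the sketch below flattens a 15×10 bit pattern into the 150 values fed to the input layer. The text format ('#' for a set bit, '.' for a clear bit), the function name `pattern_to_inputs`, and the hand-drawn stroke standing in for the numeral 1 are assumptions made for this illustration.

```python
ROWS, COLS = 15, 10  # numeral size assumed in the text


def pattern_to_inputs(pattern):
    """Flatten a 15x10 pattern of '#' (1) and '.' (0) into 150 input bits."""
    if len(pattern) != ROWS or any(len(line) != COLS for line in pattern):
        raise ValueError("pattern must be 15 rows of 10 characters")
    return [1 if ch == "#" else 0 for line in pattern for ch in line]


# A vertical stroke standing in for the numeral 1 (illustrative only).
ONE = ["....##...."] * ROWS
inputs = pattern_to_inputs(ONE)
```

Each of the 150 entries of `inputs` would drive one neuron of the input layer.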

The proposed learning algorithm for the BP-NN with 5 hidden layers is explained below:

- Declare all the variables needed to create the simulation environment.

- Pre-process the template images of handwritten numerals used to train the neural network.

- Create the neural network with the proposed weights and neurons, and initialize it with the proposed modes.

- Start training the neural network with 5 hidden layers.

- Repeat the training for the given number of epochs.

- Compare the network output with the target output, and keep repeating the training process until the target output is reached.

- Once the target output is achieved, save the neural network for the subsequent recognition process.
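The training steps above can be sketched as a short program. It is a minimal sketch under several assumptions, not the authors' implementation: the hidden layer sizes (20 neurons each), the learning rate, the stopping threshold `target_error`, the random bit patterns used as stand-in training data, and the use of JSON to save the network are all hypothetical choices, and plain back propagation without momentum is used for brevity.

```python
import json
import math
import random


def logsig(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(sizes, rng):
    # sizes = [inputs, hidden1, ..., hidden5, outputs]; last weight in a row is the bias.
    return [[[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]
            for n_in, n_out in zip(sizes, sizes[1:])]


def forward(net, x):
    acts = [list(x)]
    for layer in net:
        inp = acts[-1] + [1.0]
        acts.append([logsig(sum(w * v for w, v in zip(row, inp))) for row in layer])
    return acts


def train_pattern(net, x, target, eta):
    """Back-propagate one pattern through all layers; return its error."""
    acts = forward(net, x)
    out = acts[-1]
    delta = [o * (1 - o) * (t - o) for o, t in zip(out, target)]
    for k in range(len(net) - 1, -1, -1):
        layer, below = net[k], acts[k]
        # Error terms for the layer below, computed before these weights change.
        lower = [a * (1 - a) * sum(d * row[i] for d, row in zip(delta, layer))
                 for i, a in enumerate(below)] if k > 0 else []
        for d, row in zip(delta, layer):
            for i, v in enumerate(below + [1.0]):
                row[i] += eta * d * v
        delta = lower
    return 0.5 * sum((t - o) ** 2 for o, t in zip(out, target))


def train(net, samples, eta=0.5, max_epochs=100, target_error=0.01):
    """Repeat training until the error target is met or the epochs run out."""
    epoch, error = 0, float("inf")
    for epoch in range(1, max_epochs + 1):
        error = sum(train_pattern(net, x, t, eta) for x, t in samples)
        if error <= target_error:
            break
    return epoch, error


rng = random.Random(0)
net = init_network([150, 20, 20, 20, 20, 20, 10], rng)  # five hidden layers
# Random 150-bit patterns standing in for pre-processed numeral templates.
samples = [([rng.randint(0, 1) for _ in range(150)],
            [1.0 if i == digit else 0.0 for i in range(10)]) for digit in range(3)]
epochs, error = train(net, samples, max_epochs=5)
saved = json.dumps(net)  # the trained weights, ready to be written to a file
```

With only five epochs the error target is unlikely to be reached; a real run would use far more epochs and real numeral images.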

The recognition algorithm for the five hidden layer BP-NN approach:

- Load the trained neural network with 5 hidden layers.

- Browse for the handwritten numeral image to be recognized.

- Select the area of the image to be recognized.

- Pre-process the image after selection.

- Start the comparison process using the neural network.

- After recognition, display the recognized numerals.
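The recognition steps can be outlined in code as well. Everything below is a sketch under assumptions: the network format (a list of layers, each a list of weight rows ending with a bias), the grey-level threshold of 128, and the helper names `crop`, `binarize` and `recognize` are hypothetical, and a tiny hand-made network replaces the trained one, which would normally be loaded from a file.

```python
import math


def logsig(z):
    return 1.0 / (1.0 + math.exp(-z))


def crop(image, top, left, height, width):
    """Select the rectangular area of the image to be recognized."""
    return [row[left:left + width] for row in image[top:top + height]]


def binarize(gray, threshold=128):
    """Pre-processing: map dark pixels (ink) to 1 and light pixels to 0."""
    return [[1 if pixel < threshold else 0 for pixel in row] for row in gray]


def recognize(net, bits):
    """Run the feed-forward pass and return the index of the strongest output."""
    activations = [b for row in bits for b in row]
    for layer in net:
        inp = activations + [1.0]  # constant bias input
        activations = [logsig(sum(w * v for w, v in zip(row, inp))) for row in layer]
    return max(range(len(activations)), key=activations.__getitem__)


# Hand-made single-layer network with two inputs and two outputs (illustrative).
tiny_net = [[[5.0, -5.0, 0.0], [-5.0, 5.0, 0.0]]]
gray_image = [[0, 255, 255], [255, 255, 255]]
selected = crop(gray_image, top=0, left=0, height=1, width=2)
label = recognize(tiny_net, binarize(selected))
```

In a full system the value of `label` would be displayed to the user as the recognized numeral.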

The implementation of the character recognition application described in this paper has two phases.

- Training Phase

- Testing Phase

Training Phase

In the training phase, the neural network system is provided with information patterns. The patterns are in the form of a 10×10 matrix. Each element of the matrix can take only one of two values, 0 or 1. For example, the alphabet E can be represented as:
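A minimal sketch of such a pattern is given here. The particular 10×10 layout of E is an illustration drawn for this example, not the authors' matrix, and the helper `with_errors`, which inverts a few elements to imitate a pattern entered with errors, is likewise hypothetical.

```python
import random

# '#' marks an element with value 1, '.' an element with value 0.
E_PATTERN = [
    "##########",
    "##########",
    "##........",
    "##........",
    "########..",
    "########..",
    "##........",
    "##........",
    "##########",
    "##########",
]
E_MATRIX = [[1 if ch == "#" else 0 for ch in line] for line in E_PATTERN]


def with_errors(matrix, n_flips, rng):
    """Return a copy of the matrix with n_flips distinct elements inverted."""
    size = len(matrix[0])
    noisy = [row[:] for row in matrix]
    for pos in rng.sample(range(len(matrix) * size), n_flips):
        r, c = divmod(pos, size)
        noisy[r][c] = 1 - noisy[r][c]
    return noisy


noisy_e = with_errors(E_MATRIX, 5, random.Random(0))
```

`E_MATRIX` is the clean training pattern, while `noisy_e` is the kind of slightly corrupted pattern that the testing phase is expected to tolerate.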

Testing Phase

In the testing phase, the patterns for the alphabets, numbers or symbols are entered into the system either in correct form or with some errors in the element values. These patterns are compared with the patterns in the knowledge base prepared during the training phase. The system recognizes a pattern by comparing the weights of every element of the pattern matrix with the corresponding pattern matrix of the knowledge base. The number of iterations depends on the number of hidden layers chosen.

CONCLUSIONS

Character recognition is one of the most important processes for the human-machine interface, and a lot of research is going on in this area. Character recognition is essential because the world is steadily becoming automated, and it helps reduce the human workload. A lot of research has been done on the English language and various techniques have been studied. Multi-layer neural networks have been the area of interest. The motive of this research work was to develop a handwritten alphanumeric recognition system. We have proposed to use the multi-layer neural network architecture with two types of features: the first contour based and the second moment based. Prior to that, basic morphological operations were performed to make the data fit for feature extraction.

REFERENCES

[1]. Ramzi, A.; Zahary, A., “Online Arabic handwritten character recognition using online-offline feature extraction and back-propagation neural network,” Advanced Technologies for Signal and Image Processing (ATSIP), 2014 1st International Conference on , vol., no., pp.350,355, 17-19 March 2014.

[2]. Phienthrakul, T.; Chevakulmongkol, W., “Handwritten recognition on Pali cards of Buddhadasa Indapanno,” Computer Science and Engineering Conference (ICSEC), 2013 International, vol., no., pp.191,195, 4-6 Sept. 2013.

[3]. Selvi, P.P.; Meyyappan, T., “Recognition of Arabic numerals with grouping and ungrouping using back propagation neural network,” Pattern Recognition, Informatics and Mobile Engineering (PRIME), 2013 International Conference on , vol., no., pp.322,327, 21- 22 Feb. 2013.

[4]. Sahu, N.; Raman, N.K., “An efficient handwritten Devnagari character recognition system using neural network,” Automation, Computing, Communication, Control and Compressed Sensing (iMac4s), 2013 International Multi-Conference on , vol., no., pp.173,177, 22-23 March 2013.

[5]. Nguang Sing Ping; Yusoff, M.A., “Application of 13-point feature of skeleton to neural networks-based character recognition,” Computer & Information Science (ICCIS), 2012 International Conference on , vol.1, no., pp.447,452, 12-14 June 2012.

[6]. Pradeep, J.; Srinivasan, E.; Himavathi, S., “Performance analysis of hybrid feature extraction technique for recognizing English handwritten characters,” Information and Communication Technologies (WICT), 2012 World Congress on , vol., no., pp.373,377, Oct. 30 2012-Nov. 2 2012.

[7]. Jia-Shing Sheu; Guo-Shing Huang; Ya-Ling Huang, “Design an Interactive User Interface with Integration of Dynamic Gesture and Handwritten Numeral Recognition,” Computer, Consumer and Control (IS3C), 2014 International Symposium on , vol., no., pp.1295,1298, 10-12 June 2014.

[8]. Ouchtati, S.; Redjimi, M.; Bedda, M., “Realization of an offline system for the recognition of the handwritten numeric chains,” Information Systems and Technologies (CISTI), 2014 9th Iberian Conference on , vol., no., pp.1,6, 18-21 June 2014.

[9]. Structure for Hand Written Digit Recognition,” Service Systems and Service Management (ICSSSM), 2013 10th International Conference on , vol., no., pp.18,22, 17-19 July 2013.

[10]. Zhu Dan; Chen Xu, “The Recognition of Handwritten Digits Based on BP Neural Network and the Implementation on Android,” Intelligent System Design and Engineering Applications (ISDEA), 2013 Third International Conference on , vol., no., pp.1498,1501, 16-18 Jan. 2013.

[11] V.K. Govindan and A.P. Shivaprasad, “Character Recognition – A review,” Pattern Recognition, Vol. 23, no. 7, pp. 671- 683, 1990.

[12] Plamondon Rejean, Sargur N. Srihari, “On line and off line handwriting recognition: A comprehensive survey”, IEEE Transactions on PAMI, vol. 22, No. 1, 2000.

[13] Anil K. Jain, Robert P. W. Duin, Jianchang Mao, “Statistical Pattern Recognition:A Review”, IEEE Transactions on PAMI, Vol. 22, No. 1, pp. 4-37, January 2000.

[14] R. O. Duda, P. E. Hart, D. G. Stork, “Pattern Classification”, second ed., Wiley- Interscience, New York 2001.

[15] R.G. Casey and E.Lecolinet, “A Survey of Methods and Strategies in Character Segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 18, No.7, July 1996, pp. 690-706

[16] C.-L. Liu, K. Nakashima, H. Sako, and H. Fujisawa, “Handwritten digit recognition: Benchmarking of state-of-the-art techniques”, Pattern Recognition. 36 (10), 2271–2285, 2003.

[17] A. Rajavelu, M. T. Musavi, and M. V. Shirvaikar, “A Neural Network Approach to Character Recognition,” Neural Networks, 2, pp. 387-393, 1989.

[18] J. X. Dong, A. Krzyzak, C. Y. Suen, “An improved handwritten Chinese character recognition system using support vector machine”, Pattern Recognition Letters. 26(12), 1849–1856, 2005

[19] S.C.W. Ong, S. Ranganath, “Automatic sign language analysis: a survey and the future beyond lexical meaning”, IEEE Transactions on Pattern Analysis and Machine Intelligence 27 (6) (2005) 873– 891.

[20] D. S. Zhang and G. Lu, “A comparative study on shape retrieval using fourier descriptors with different shape signatures”, in Proc. International Conference on Intelligent Multimedia and Distance Education (ICIMADE01), 2001

[21] Rafael C. Gonzalez, Richard E. woods and Steven L.Eddins, “Digital Image Processing using MATLAB”, Pearson Education, Dorling Kindersley, South Asia, 2004.

[22] Y. Kimura, T. Wakahara, and A. Tomono, “Combination of statistical and neural classifiers for a high-accuracy recognition of large character sets”, Transactions of IEICE Japan.J83-D-II (10), 1986–1994, 2000 (in Japanese).